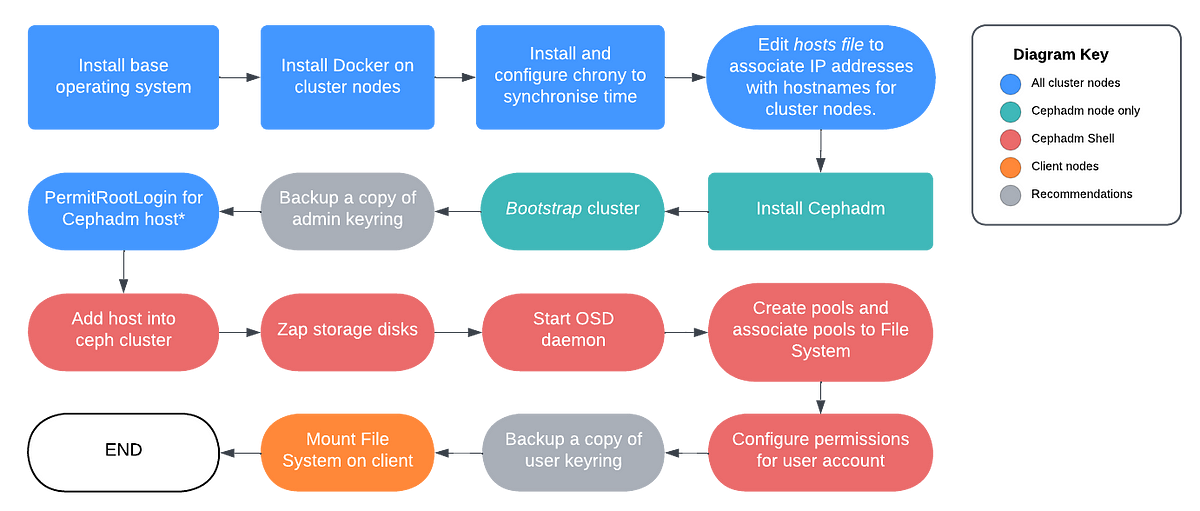

21 September, 2023 — Step-by-step instructions for efficiently deploying CephFS

CephFS Deployment for Dummies

Forewarning: Opting for a clean installation whenever possible is strongly recommended. Unless you are confident in your expertise, tinkering with different configurations can often lead to lingering issues and conflicts that may go unnoticed or be time-consuming to debug.

Context

CephFS (Ceph File System) is Ceph's POSIX-compliant, distributed file system. Built on top of Ceph's object storage, CephFS is one of several storage interfaces that Ceph supports, and it lets users mount a file system in much the same way as traditional network file systems like NFS. Besides CephFS, Ceph supports object storage through the S3-compatible RADOS Gateway (RGW) and block storage via RADOS Block Device (RBD).

Pre-requisites:

(a) Hardware: CephFS scales horizontally, so storage capacity can be expanded by adding devices. For performance, however, network infrastructure, CPU, and memory are often more critical than raw capacity. Adequate bandwidth between nodes and robust hardware are key for efficient Ceph operations, especially as the cluster grows. For more details, refer to Ceph's official documentation on hardware requirements.

(b) Internet Connectivity: Required for downloading and installing Ceph packages and dependencies.

(c) Nodes: A minimum of 3 nodes is required for CephFS deployment.

(d) Networking: Ensure adequate network bandwidth between the cluster nodes for efficient data transfer. Consider using a dedicated network for Ceph traffic to minimize interference.

1. Install Base Operating System

Ceph supports several Linux distributions, including Ubuntu, CentOS, and Debian. Ubuntu is highly recommended for its stability and compatibility with Ceph. Given how scarce Ceph documentation was at the time of writing, Ubuntu's larger community support is a further reason to choose it.

Additionally, if you need to bind ports or make other network-level configurations, such as adjusting firewall settings or setting up network interfaces, they should be done at this stage.

2-3. Install Docker and Chrony

Chrony is a network time synchronization tool that helps keep the system clock accurate by synchronizing it with NTP servers, which is essential for distributed systems like Ceph to ensure all nodes operate on the same time reference.

In the provided code, we are using the pool.ntp.org NTP pool for time synchronization, but you can choose whichever NTP provider you prefer.

    # Install Docker
    curl -fsSL https://get.docker.com -o get-docker.sh && bash get-docker.sh

    # Install 'chrony' package for time synchronisation
    apt -y install chrony

    # Add Network Time Protocol (NTP) to chrony configuration
    # Replace <zone> with NTP server nearest to your node
    echo "pool <zone>.pool.ntp.org iburst" >> /etc/chrony/chrony.conf

    # Restart the chrony service to apply changes
    systemctl restart chrony

4. Map IP Addresses to Hostnames

Map the IP addresses of the cluster nodes to hostnames for easier name resolution.

    # Map IP address of cluster nodes to hostname
    # (example addresses; replace with the real addresses of your nodes)
    sudo sh -c 'echo "10.0.0.1 host1" >> /etc/hosts'
    sudo sh -c 'echo "10.0.0.2 host2" >> /etc/hosts'
    sudo sh -c 'echo "10.0.0.3 host3" >> /etc/hosts'
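Appending with `>>` adds a duplicate line every time the commands are re-run. The following is a minimal sketch of an idempotent alternative. The helper name `add_host_entry` and the `HOSTS_FILE` variable are hypothetical, and the addresses are examples; `HOSTS_FILE` defaults to a scratch file so the snippet can be tried safely.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append "IP hostname" to a hosts file only if absent.
# HOSTS_FILE defaults to a scratch file; set HOSTS_FILE=/etc/hosts (as root)
# for real use.
HOSTS_FILE="${HOSTS_FILE:-$(mktemp)}"

add_host_entry() {
    local ip="$1" name="$2"
    # -x matches the whole line, -F treats the pattern as a fixed string
    if ! grep -qxF "$ip $name" "$HOSTS_FILE"; then
        echo "$ip $name" >> "$HOSTS_FILE"
    fi
}

add_host_entry 10.0.0.1 host1
add_host_entry 10.0.0.2 host2
add_host_entry 10.0.0.3 host3
add_host_entry 10.0.0.1 host1   # repeated call is a no-op
```

Because the check matches the exact line, an existing entry for the same hostname with a different address is not replaced; that case still needs a manual edit.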

5. Install Ceph using cephadm

cephadm is a tool for deploying and managing Ceph clusters; it can bootstrap a new cluster with a single command. In subsequent sections, we will also use it to issue commands to the Ceph cluster as a whole rather than managing the individual nodes directly.

Note: As of July 2024, quincy has reached end-of-life but the cephadm tool should be version agnostic.

    # Download the cephadm script from the Ceph GitHub repository
    curl --silent --remote-name --location https://github.com/ceph/ceph/raw/quincy/src/cephadm/cephadm

    # Make 'cephadm' file executable
    chmod +x cephadm

    # Add a repository configuration for the "quincy" release
    ./cephadm add-repo --release quincy

    # Install cephadm on cluster node's system
    ./cephadm install

    # Confirm the location of the installed "cephadm" executable
    which cephadm
    # >>> /usr/sbin/cephadm

6. Bootstrapping Cluster

Bootstrapping with cephadm creates and initialises the core components of a new Ceph cluster, such as the monitor and manager daemons, that are needed for the cluster to operate.

    # Replace <Monitor-IP> with Cephadm host's IP Address
    # Replace <Cluster-Network> with the Ceph cluster network's subnet in CIDR notation (e.g. 10.0.0.0/24)
    cephadm bootstrap --mon-ip <Monitor-IP> --cluster-network <Cluster-Network>

7. Backup Admin Keyring

⚠️ If the cephadm administrative keyring is lost, the Ceph cluster will become unmanageable unless it is reinstalled from scratch. Ceph has no built-in warning or protection against deletion of the admin keyring. Maintaining a backup copy is thus crucial for safeguarding cluster integrity in a production environment.

The admin keyring and key configuration files can be found under /etc/ceph on the Cephadm host. You are free to choose how to back up the files in this directory. The simplest option is to use the scp or cp command to copy all the files in the directory to a remote location.
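As one possible way to do this, the sketch below bundles a configuration directory into a timestamped archive that can then be copied off the host. The function name `backup_ceph_conf`, the destination directory and the remote host in the usage comment are all hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: archive a config directory into a timestamped tarball
# and print the path of the archive that was created.
backup_ceph_conf() {
    local src="$1" dest_dir="$2"
    local archive
    archive="$dest_dir/ceph-conf-$(date +%Y%m%d-%H%M%S).tar.gz"
    mkdir -p "$dest_dir" || return 1
    # -C keeps the paths inside the archive relative to the source directory
    tar -czf "$archive" -C "$src" . || return 1
    chmod 600 "$archive"   # the archive contains secret keys
    echo "$archive"
}

# Real use (as root), assuming /root/ceph-backup is an acceptable destination:
#   archive=$(backup_ceph_conf /etc/ceph /root/ceph-backup)
#   scp "$archive" user@backup-host:/path/to/backups/
```

Keeping the destination directory outside the source directory avoids archiving the backup into itself.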

8. Permit Root Login

The Cephadm host communicates with the cluster nodes via SSH and requires sufficient privileges to orchestrate and manage configuration on the target nodes. The easiest (though not the most secure) way to achieve this is to permit root login from the Cephadm host on each cluster node. In production environments, it is recommended to follow the principle of least privilege.

Thereafter, SSH keys need to be copied from the Cephadm host to the remaining cluster nodes. The purpose of this is to enable passwordless SSH for Cephadm to manage your cluster nodes "automatically".

    # On each cluster node:
    # Replace <IP-Address> with Cephadm host's IP Address
    echo "Match Address <IP-Address>" | sudo tee -a /etc/ssh/sshd_config
    echo "    PermitRootLogin yes" | sudo tee -a /etc/ssh/sshd_config

    # Restart the SSHD Service
    sudo systemctl restart sshd

    # On the Cephadm host:
    # Generate SSH Keys using ssh-keygen, press ENTER till completion
    ssh-keygen

    # Copy ssh-keys to other hosts
    ssh-copy-id -f -i /etc/ceph/ceph.pub root@host1
    ssh-copy-id -f -i /etc/ceph/ceph.pub root@host2

9. Add hosts/nodes to the Ceph cluster

Hosts in this context refer to cluster nodes. If you have a large number of cluster nodes, you can utilise a for loop in bash to automate this further.

    # Replace <Host-Name> with cluster node's hostname
    # Replace <IP-Address> with the node's corresponding IP Address
    ceph orch host add <Host-Name> <IP-Address>
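The bash loop mentioned above could look something like the sketch below. The function name `add_hosts`, the `name:ip` argument format and the `DRY_RUN` switch are assumptions made for illustration; the hostnames and addresses are examples. With `DRY_RUN=1` (the default here) the commands are only printed, so the list can be reviewed before anything is sent to the cluster.

```bash
#!/usr/bin/env bash
# Hypothetical helper: take "hostname:ip" arguments and print (or run) one
# `ceph orch host add` command per argument.
DRY_RUN="${DRY_RUN:-1}"

add_hosts() {
    local entry name ip
    for entry in "$@"; do
        name="${entry%%:*}"   # text before the first colon
        ip="${entry#*:}"      # text after the first colon
        if [ "$DRY_RUN" = "1" ]; then
            echo "ceph orch host add $name $ip"
        else
            ceph orch host add "$name" "$ip"
        fi
    done
}

add_hosts host2:10.0.0.2 host3:10.0.0.3
```

Setting `DRY_RUN=0` on the Cephadm host runs the commands instead of printing them.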

10-11. Zap Storage Disks

zap is a command that erases a storage device and prepares it for use by Ceph. It is recommended to always run this command before starting the Object Storage Daemon (OSD) on the storage device, as any existing data or partitions may cause the OSD to fail.

    # Replace <Host-Name> with cluster node's hostname (as added above)
    # Replace <Device> with the device path (e.g. /dev/sdb)

    ## Zap the storage device/disk and start the OSD daemon for the device
    ceph orch device zap <Host-Name> <Device> --force
    ceph orch daemon add osd <Host-Name>:<Device>
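Since zapping destroys whatever is on the disk, it can be worth checking first that the device is not currently mounted. The sketch below is one rough way to do that on the node that owns the disk. The helper name `device_in_use` is hypothetical, and the simple prefix match is a simplification that would also match a longer device name sharing the same prefix.

```bash
#!/usr/bin/env bash
# Hypothetical pre-flight check: exit 0 if the device, or one of its
# partitions, appears as a source in a mounts table such as /proc/mounts.
device_in_use() {
    local dev="$1" mounts="${2:-/proc/mounts}"
    # Field 1 of each line is the mounted source; a prefix match on the device
    # path also catches partitions such as /dev/sdb1 for /dev/sdb.
    awk -v d="$dev" 'index($1, d) == 1 { found = 1 } END { exit !found }' "$mounts"
}

# Example (run on the node that owns the disk; /dev/sdb is a placeholder):
#   if device_in_use /dev/sdb; then
#       echo "/dev/sdb is mounted, refusing to zap" >&2
#   else
#       ceph orch device zap <Host-Name> /dev/sdb --force
#   fi
```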

12. Create Pools and Associate Them with the File System

Creates a CRUSH rule named hdd-pool-rule that defines how data is stored and replicated across the cluster. Here, default is the CRUSH root, host is the failure domain, and hdd is the device class.

    ceph osd crush rule create-replicated hdd-pool-rule default host hdd

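Creates a new pool named cephfs.metadata for storing the metadata associated with the Ceph File System, and a second pool named cephfs.default.data for file data. The parameters 256 256 specify the number of placement groups (pg_num) and the number of placement groups used for placement (pgp_num); the PG count affects how evenly data is distributed across OSDs.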

    ceph osd pool create cephfs.metadata 256 256
    ceph osd pool create cephfs.default.data 256 256
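How many PGs to choose depends on the size of the cluster. A commonly cited rule of thumb is roughly (number of OSDs × 100) / replica count across all pools, rounded up to the next power of two. The sketch below works through that arithmetic; the function name `suggest_pg_num` and the sample cluster sizes are assumptions, and recent Ceph releases also include a PG autoscaler that can adjust the value for you.

```bash
#!/usr/bin/env bash
# Rule-of-thumb PG count: (OSDs * 100) / replicas, rounded up to a power of two.
# This is a starting point for the whole cluster, not a per-pool guarantee.
suggest_pg_num() {
    local osds="$1" replicas="$2"
    local target=$(( osds * 100 / replicas ))
    local pg=1
    while [ "$pg" -lt "$target" ]; do
        pg=$(( pg * 2 ))
    done
    echo "$pg"
}

suggest_pg_num 12 3   # 12 OSDs, 3 replicas: target 400 rounds up to 512
```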

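Configures an erasure coding profile named 16-4-osd, where k=16 specifies the number of data chunks and m=4 specifies the number of coding chunks. An erasure-coded pool is then created from the profile, and overwrites are enabled on it, which CephFS requires for erasure-coded data pools.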

    ceph osd erasure-code-profile set 16-4-osd k=16 m=4 crush-failure-domain=osd
    ceph osd pool create cephfs.ec.data 128 128 erasure 16-4-osd
    ceph osd pool set cephfs.ec.data allow_ec_overwrites true
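To make the trade-off behind k and m concrete: each object is split into k data chunks plus m coding chunks, the pool survives the loss of up to m chunks, and the usable fraction of raw capacity is k / (k + m). The sketch below prints those numbers for a given profile; the function name `ec_summary` is hypothetical.

```bash
#!/usr/bin/env bash
# Back-of-envelope numbers for an erasure-code profile with k data chunks
# and m coding chunks.
ec_summary() {
    local k="$1" m="$2"
    local chunks=$(( k + m ))
    local usable_pct=$(( k * 100 / chunks ))   # integer percentage of raw capacity
    echo "chunks_per_object=$chunks"
    echo "tolerated_failures=$m"
    echo "usable_percent=$usable_pct"
}

ec_summary 16 4
```

For k=16 and m=4 this reports 20 chunks per object, so with crush-failure-domain=osd the cluster needs at least 20 OSDs to place every chunk on a separate device.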

Initializes a new Ceph File System named cephfs1, using the previously created metadata and default data pools.

    # Use 'cephfs.default.data' and 'cephfs.metadata' pool to create File System
    ceph fs new cephfs1 cephfs.metadata cephfs.default.data

Integrates the erasure-coded pool into the cephfs1 file system, allowing it to be used alongside the other pools for data storage.

    # Add EC pool separately into File System
    ceph fs add_data_pool cephfs1 cephfs.ec.data

Deploys three metadata servers (MDS) for the cephfs1 file system, with one active and two standby instances to ensure high availability and fault tolerance. Without an active MDS, clients cannot access or modify metadata, which means no read, write or recovery operation can be performed on files or directories in CephFS.

Essentially, without standby MDSs, MDS becomes a single point of failure for your cluster.

    # Start up 3 metadata service (1 active, 2 standby)
    ceph orch apply mds cephfs1 3

13-14. Configure Permissions for User Account

Authorize a new client user for the specified path in the file system. Replace <client_id> with your desired client identifier (e.g., alvin).

    ceph fs authorize cephfs1 client.<client_id> / rw

Running the above command will output something that resembles the following:

    [client.alvin]
        key = AQDS28fdsfsadasNRAA4ZwsDWYRDfI2Zw+6Dji1Lg==

This key is used to access the Ceph File System. If you are the system administrator, you can back up this generated key and share it with the authorised user where necessary. If it is lost, the key can be retrieved again using ceph auth ls (all users) or ceph auth get client.alvin (an individual user).
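Rather than pasting the key around, it can be stored in a file that only its owner can read; the mount helper accepts such a file through its `secretfile=` option. The sketch below extracts the bare key from keyring-formatted text. The function name `save_client_secret` and the file path in the usage comment are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: pull the bare key out of keyring-formatted text on stdin
# and store it in a file readable only by its owner.
save_client_secret() {
    local secret_file="$1"
    local key
    # Keyring lines look like "key = <base64>"; print the third field
    key=$(awk '$1 == "key" && $2 == "=" { print $3 }')
    [ -n "$key" ] || return 1
    # umask 077 inside a subshell so a newly created file gets mode 600
    ( umask 077; printf '%s\n' "$key" > "$secret_file" )
}

# Real use on the admin node, assuming the client is called "alvin":
#   ceph auth get client.alvin | save_client_secret /etc/ceph/alvin.secret
```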

15. Mount CephFS on Client

Congratulations, you have successfully deployed a Ceph File System 🎉 ! All that is left to do is to mount the file system.

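    # 'name' takes the CephX user name without the 'client.' prefix
    mount -t ceph 10.2.214.1:6789:/ /data/cephfs -o name=alvin,secret=AQDS28fdsfsadasNRAA4ZwsDWYRDfI2Zw+6Dji1Lg==

    # Store files created under /data/cephfs/ec in the erasure-coded pool
    mkdir -p /data/cephfs/ec
    setfattr -n ceph.dir.layout.pool -v cephfs.ec.data /data/cephfs/ec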

If you need the mount to persist across reboots, you can add the following line to the /etc/fstab file:

    10.2.220.4:6789,10.2.220.5:6789,10.2.220.6:6789:/ /data/ceph/cephfs/EC ceph name=alvin,_netdev,noatime,secret=AQDS28fdsfsadasNRAA4ZwsDWYRDfI2Zw+6Dji1Lg== 0 0

    # Then trigger a mount with the following command
    mount -a
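Because /etc/fstab is usually world-readable, embedding the key in it exposes the secret to every local user. A possible variation, sketched below, builds the same kind of entry but points at a key file through the `secretfile=` option instead. The function name `cephfs_fstab_line` and the path /etc/ceph/alvin.secret are hypothetical, and the sketch assumes the key has already been saved to that file.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print one /etc/fstab line for a kernel CephFS mount.
#   $1 = comma-separated monitor addresses (host:port)
#   $2 = local mount point
#   $3 = CephX user name without the "client." prefix
#   $4 = path to a file holding that user's secret key
cephfs_fstab_line() {
    local mons="$1" mountpoint="$2" user="$3" secretfile="$4"
    printf '%s:/ %s ceph name=%s,secretfile=%s,_netdev,noatime 0 0\n' \
        "$mons" "$mountpoint" "$user" "$secretfile"
}

cephfs_fstab_line "10.2.220.4:6789,10.2.220.5:6789,10.2.220.6:6789" \
    /data/ceph/cephfs/EC alvin /etc/ceph/alvin.secret
```

The printed line can be reviewed and then appended to /etc/fstab by hand.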

Afterword

Deploying a Ceph cluster is often regarded as the simplest part of the journey; the real challenge lies in determining the optimal configurations that align with your specific data types and requirements. This crucial step demands thorough research and careful consideration, as the right settings can significantly impact performance, fault tolerance, and overall reliability.

Over the past three months, I have rigorously tested the performance of Bitdeer's Ceph cluster alongside several other engineers. Our aim was to establish a robust and fault-tolerant alternative to Qiniu, which had previously encountered issues with self-healing. Through meticulous evaluation and experimentation, we sought to identify the most effective configurations for our Filecoin mining operations.

To ensure a comprehensive assessment, we utilised Vdbench, a powerful tool that stress-tests the cluster to its limits. Unfortunately, it is no longer actively maintained and has certain syntax bugs that are not well documented. Nevertheless, it allowed us to gather valuable insights into performance metrics and make informed decisions about the best configurations for our specific use case.

Made by Owyong Jian Wei